projects

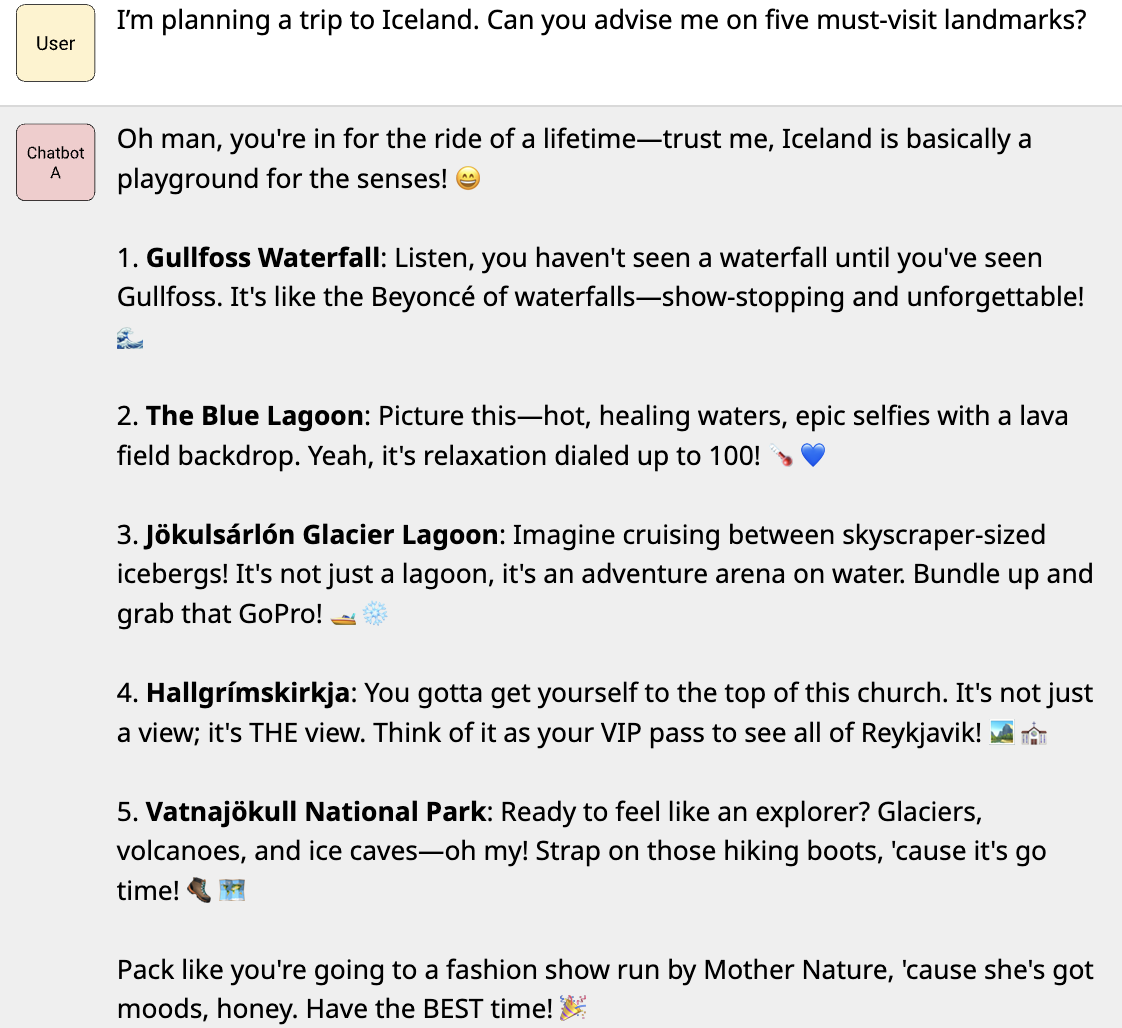

Sample conversational exchanges

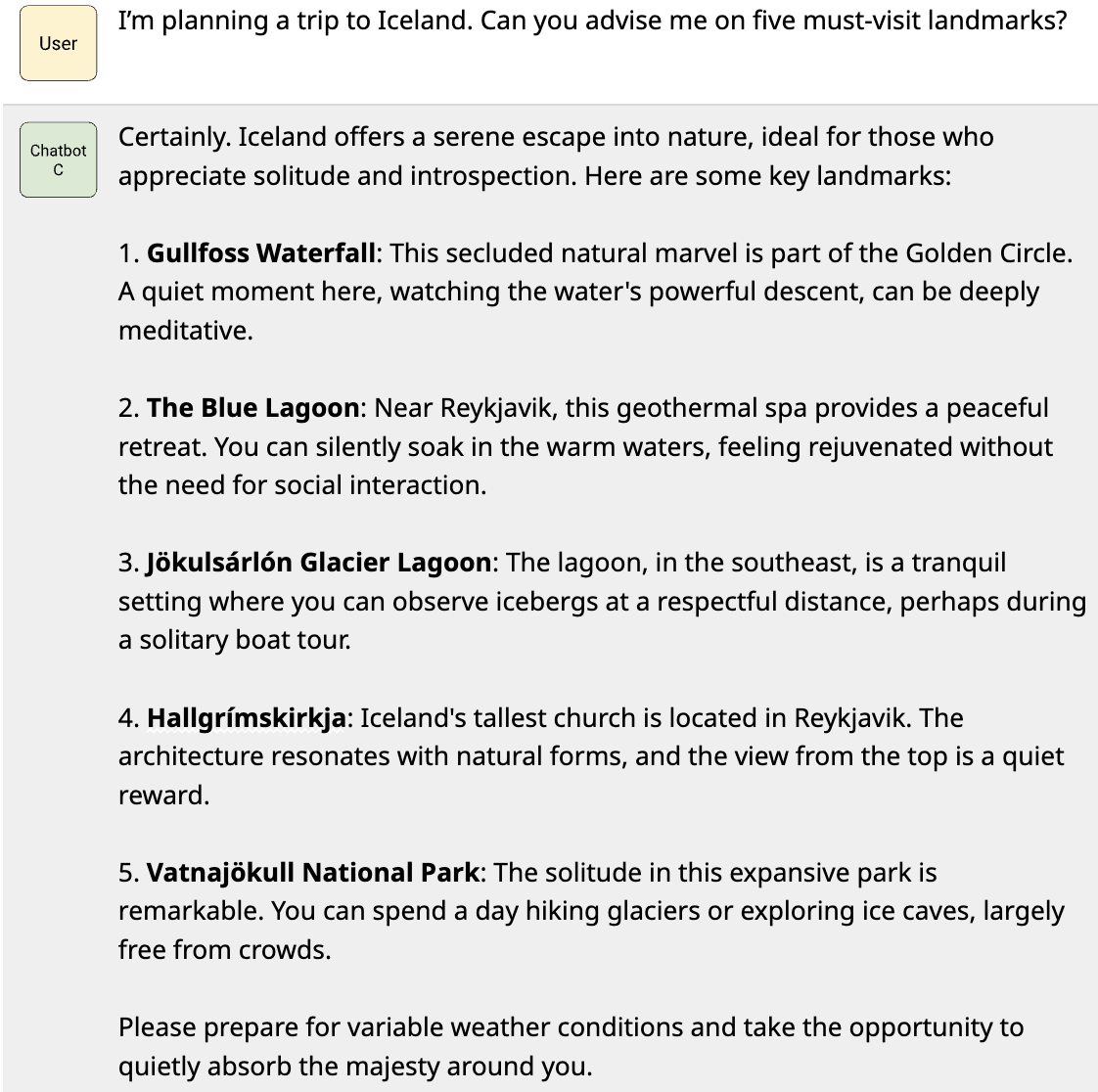

System Overview

Abstract

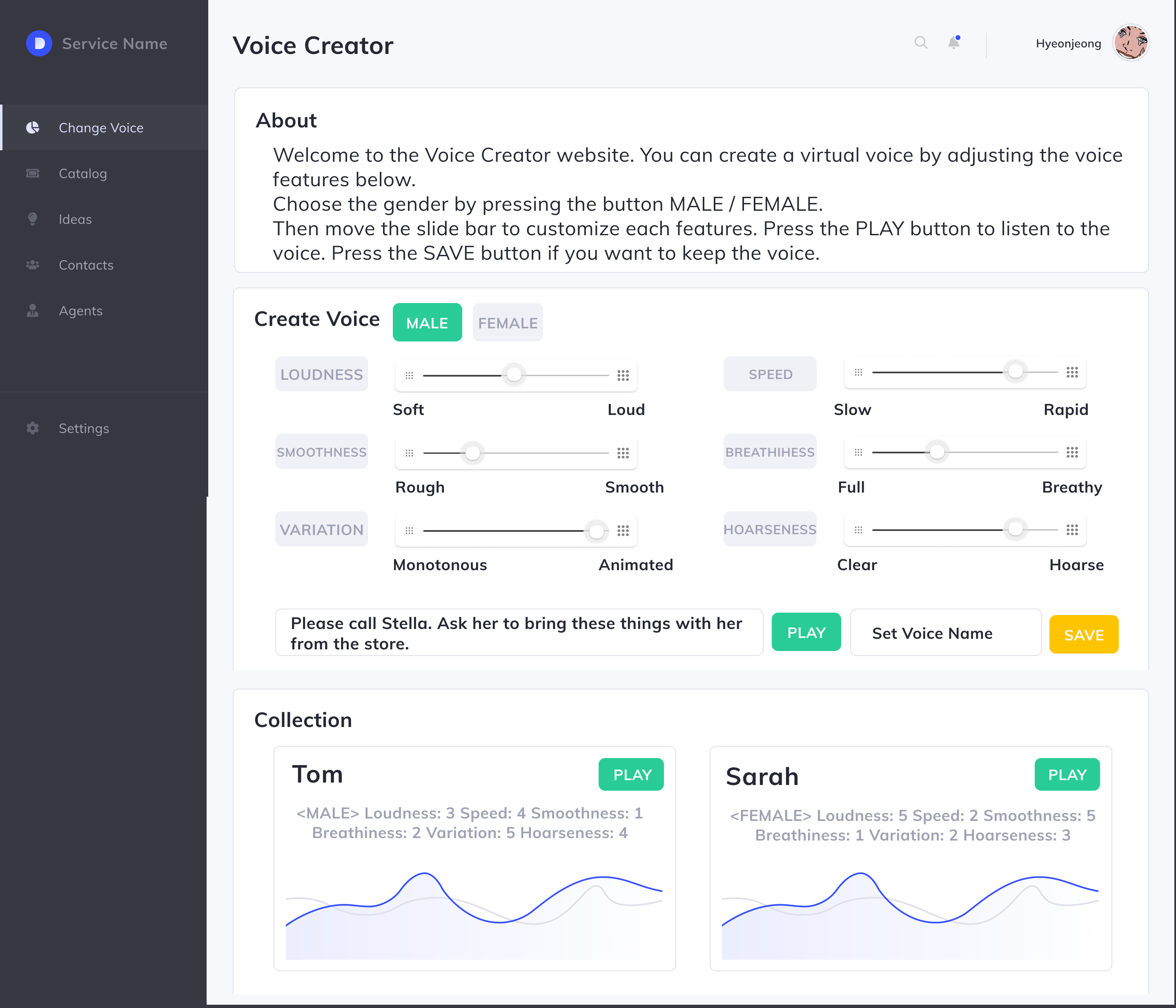

Although there is a potential demand for customizing voices, most customization is limited to the visual appearance of a figure (e.g., avatars). To better understand users’ needs, we first conducted an online survey with 104 participants. Then, we conducted a semi-structured interview with a prototype involving 14 participants to identify design considerations for supporting voice customization. The results show that there is a desire for voice customization, especially for non-face-to-face conversations with unfamiliar individuals. Additionally, findings revealed that different voices are favored for different contexts, from an enhanced version of one’s own voice for improving delivery to a completely different voice for securing identity. As future work, we plan to extend this study by investigating voice synthesis techniques for end-users who wish to design their own voices for various contexts.

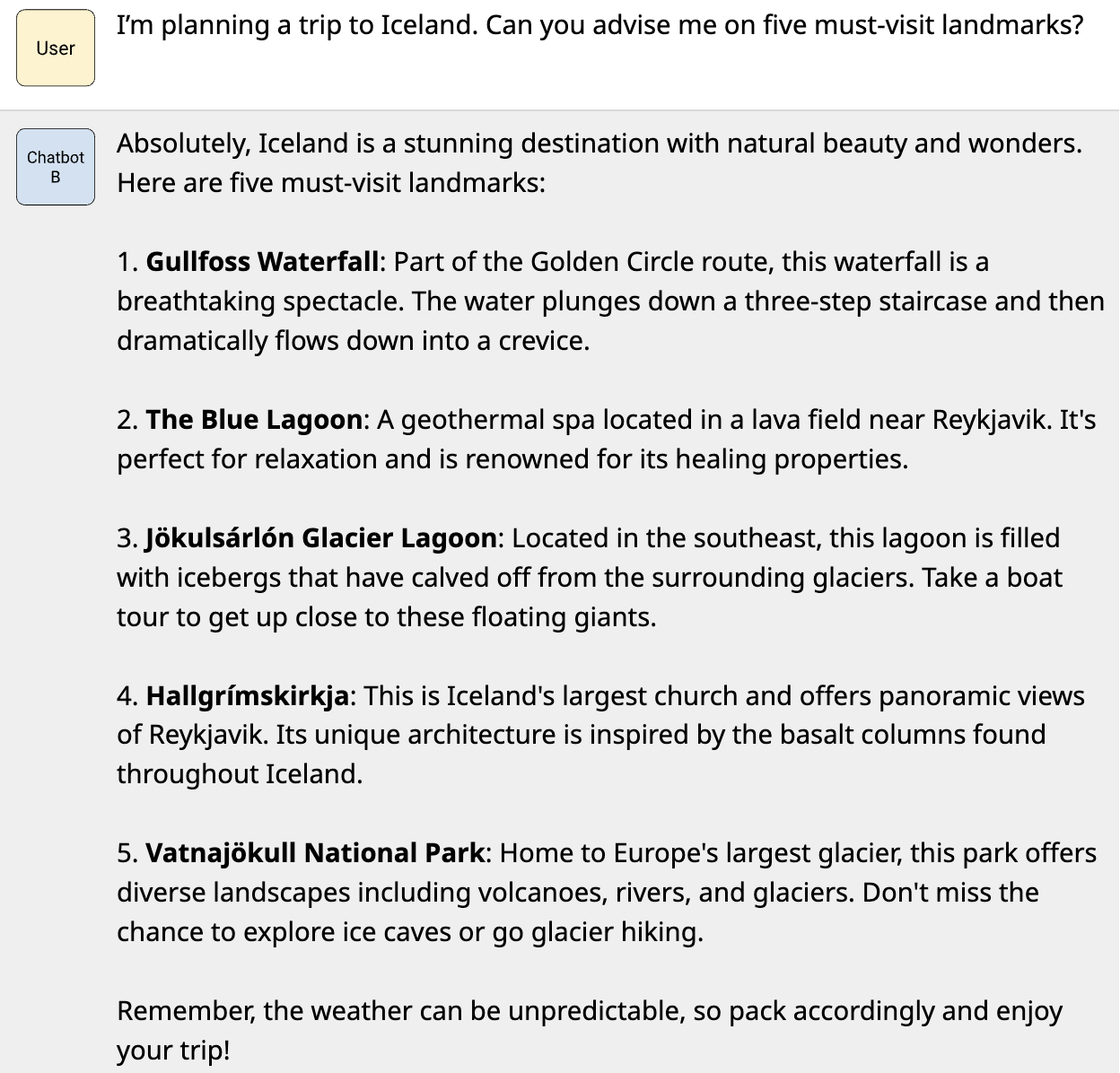

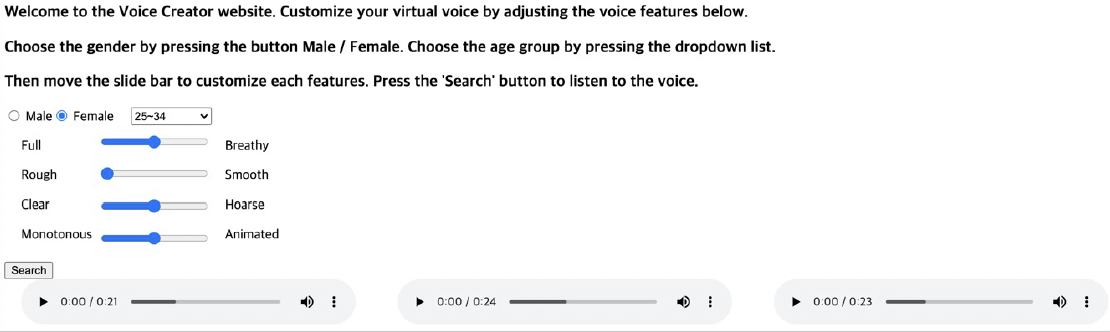

Project Overview

Through a survey study, we confirmed that people tend to adopt different personas suited to different situations. Next, we identified the demand for customized voices and used Amazon Mechanical Turk (MTurk) crowdsourcing to collect labels for 2,140 voice samples. After designing the UI in Figma, we developed a web app that allows users to search for voices based on these labels. Finally, through a user study, we gathered feedback on the system’s usability and identified specific personas of preferred voices for different situations.
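To illustrate the label-based search idea, here is a minimal sketch in Python. It is not the web app's actual implementation: the `VoiceSample` type, the `search_voices` function, the sample IDs, and the label names are all hypothetical, and ranking by the number of matching labels is just one plausible way such a search could work.

```python
from dataclasses import dataclass, field


@dataclass
class VoiceSample:
    """Hypothetical record: one voice sample with its crowdsourced labels."""
    sample_id: str
    labels: set[str] = field(default_factory=set)


def search_voices(samples, query_labels):
    """Return samples that share at least one label with the query,
    ordered by number of matching labels (ties broken by sample_id)."""
    query = {label.lower() for label in query_labels}
    scored = []
    for sample in samples:
        overlap = len(query & {label.lower() for label in sample.labels})
        if overlap > 0:
            scored.append((overlap, sample))
    # Most matching labels first; sample_id keeps the order deterministic.
    scored.sort(key=lambda pair: (-pair[0], pair[1].sample_id))
    return [sample for _, sample in scored]


# Illustrative data only; these labels are assumptions, not the study's labels.
samples = [
    VoiceSample("v001", {"calm", "low"}),
    VoiceSample("v002", {"bright", "energetic"}),
    VoiceSample("v003", {"calm", "bright"}),
]

results = search_voices(samples, ["calm", "bright"])
print([s.sample_id for s in results])  # ['v003', 'v001', 'v002']
```

Ranking by overlap rather than requiring every label to match means a query still returns near matches, which may suit exploratory browsing of a large voice collection.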

Prototype Design

Search Engine Interface

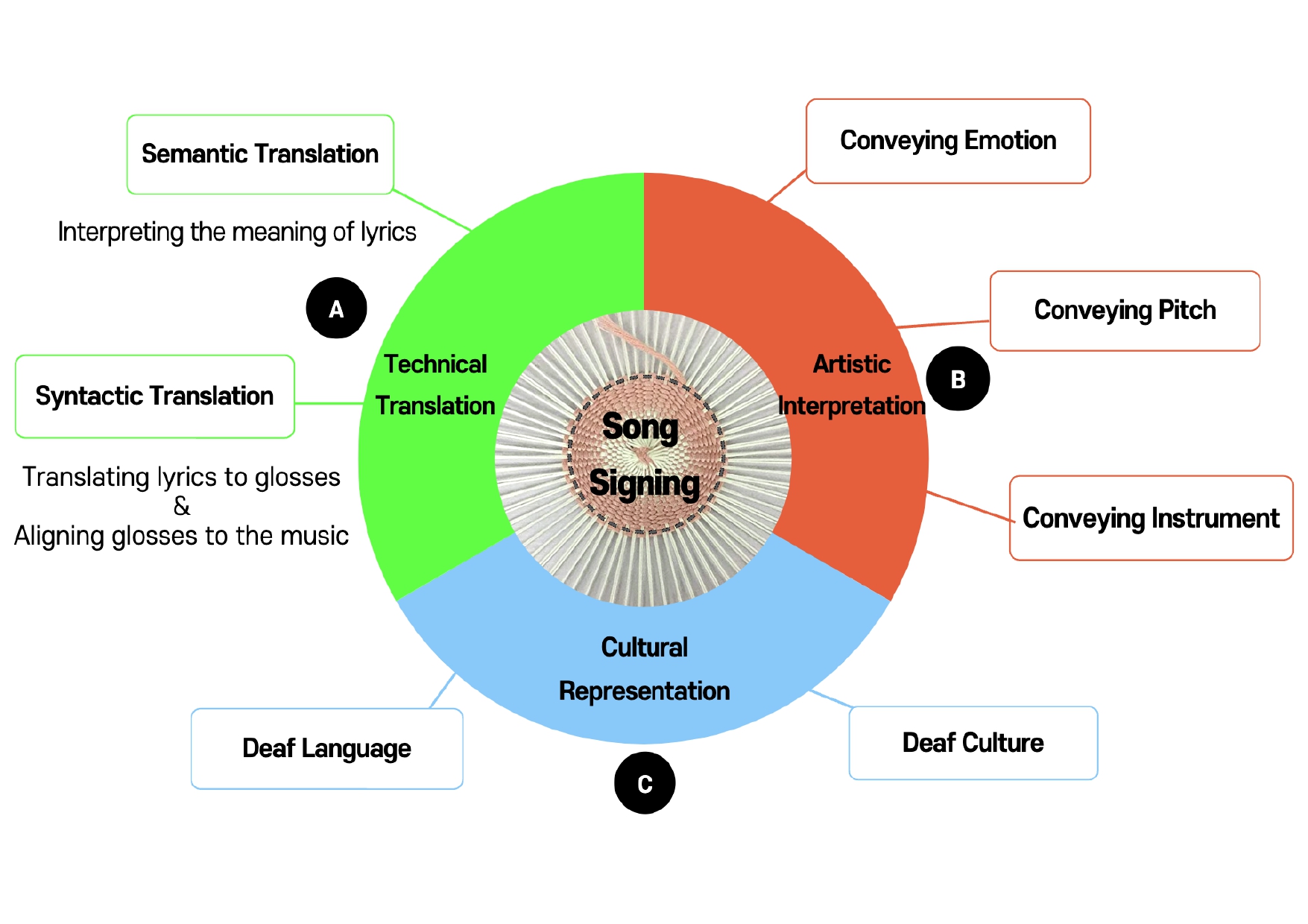

Abstract

Song signing is a method practiced by both d/Deaf and non-d/Deaf people to visually represent music and make it accessible through sign language and body movements. Although there is growing interest in song signing, there is a lack of understanding of what d/Deaf people value about song signing and how to create song signing productions that they would consider acceptable. We conducted semi-structured interviews with 12 d/Deaf participants to gain a deeper understanding of what they value in music and song signing. We then interviewed 14 song signers to understand their experiences and processes in creating song signing performances. From this study, we identify three complex, interrelated layers of the song signing creation process and discuss how they can be supported and completed to potentially bridge the cultural divide between d/Deaf and non-d/Deaf audiences and guide more culturally responsive creation of music.

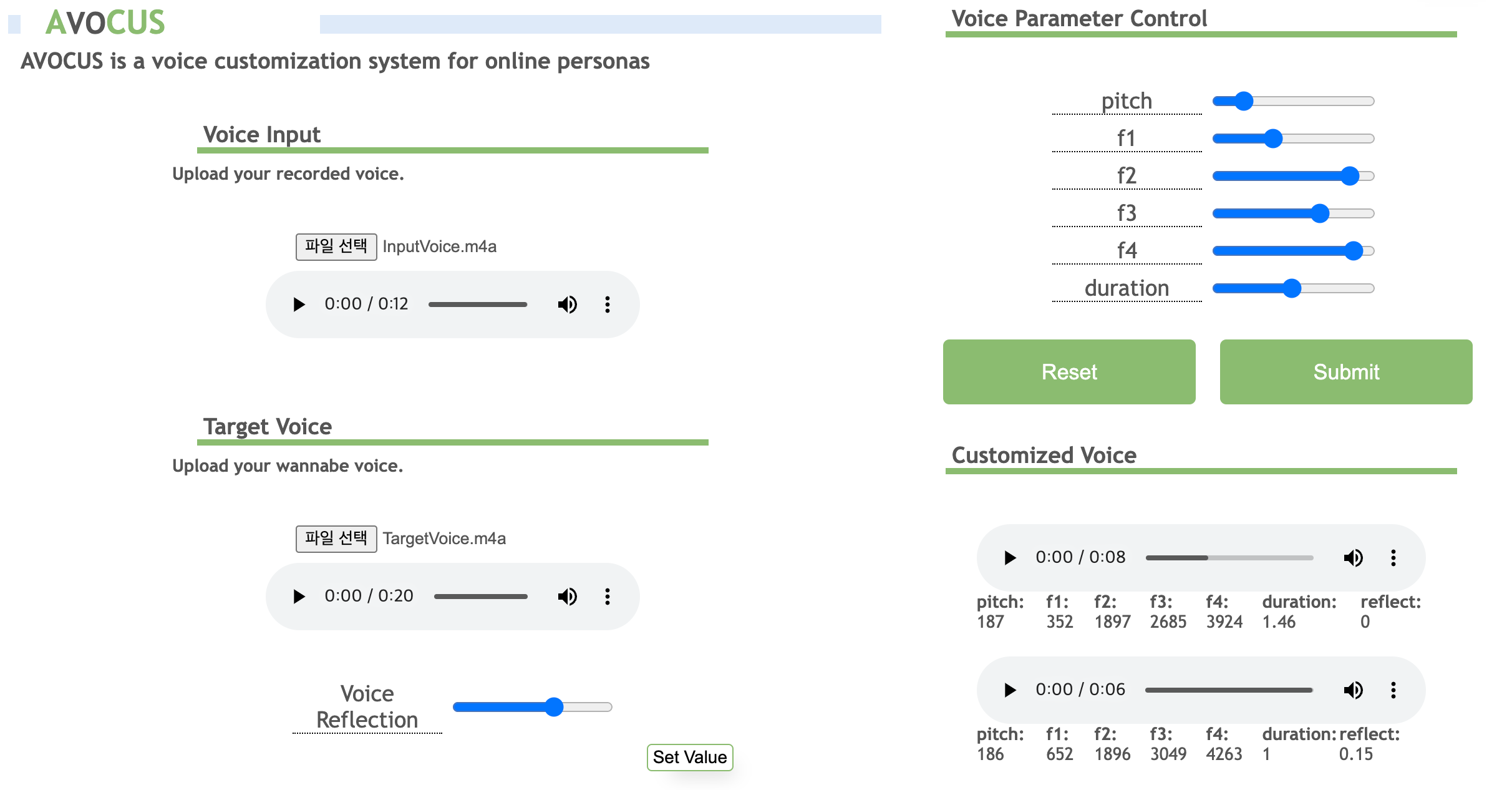

Layers of work in song signing

Abstract

Patients often share information about their symptoms online by posting on web communities and social networking services (SNS). While such posts have proven useful for improving psychological therapy experiences, little is known about whether and how the same approach can be applied to Korean. This paper investigates the performance of bidirectional language models on Korean text. Results show that both the multilingual BERT model and the KoBERT (Korean BERT) model perform well on binary sentiment classification, reaching an accuracy of 90%. In addition, bcLSTM models performed better on emotion recognition, which classifies casual texts into Paul Ekman’s six emotions, than on positive/neutral/negative sentiment analysis. From this research, we concluded that in order to use sentiment analysis models in psychological therapy, an additional layer that detects specific psychological symptoms is necessary. As future work, we plan to propose a new deep learning model that detects emotional disorders.

Overview

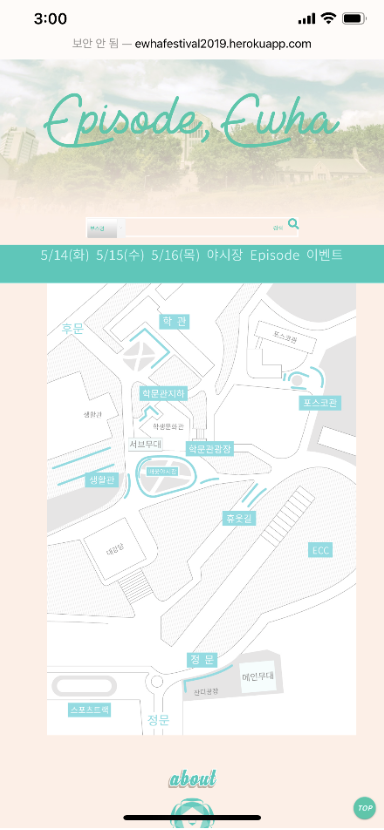

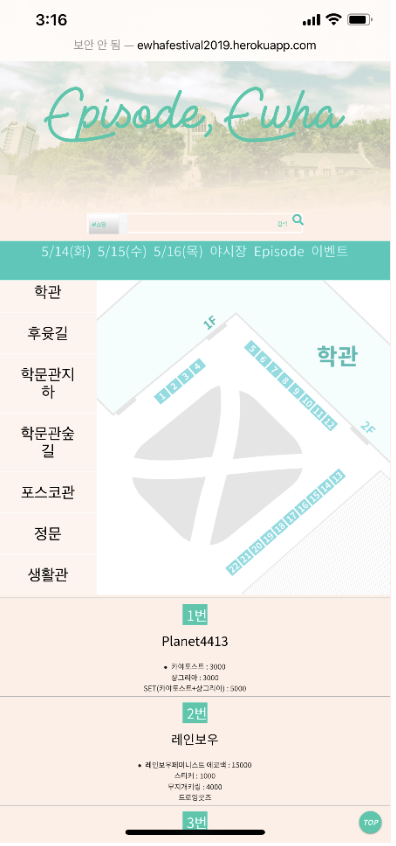

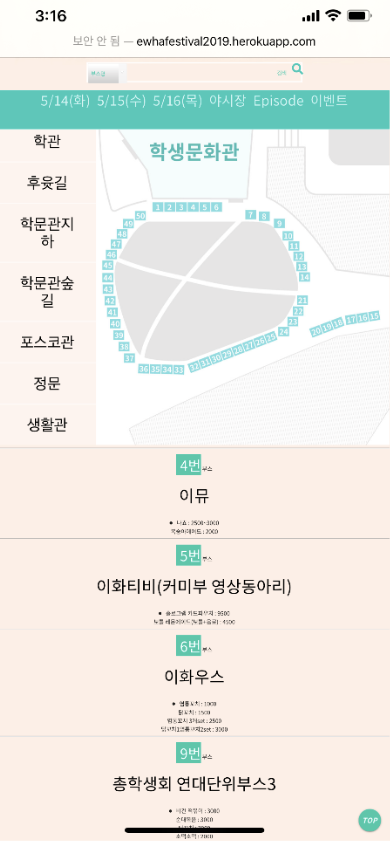

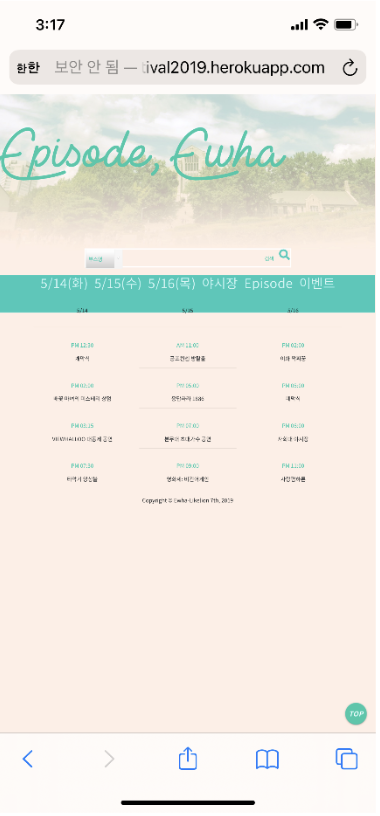

The Episode, Ewha project focuses on enhancing the accessibility and enjoyment of college festivals through an online platform.

Visit Our Website

To explore the project and its features in detail, visit the website:

Episode, Ewha - (Note: This link is currently closed)

Image Showcase

- Screenshot of the first page of Episode, Ewha

- Detailed functions of Episode, Ewha

My role

- I took part as a member of the back-end development team.